Stepper: A Lightweight PowerShell Utility Module for Creating Resumable Scripts

Hello, friends!

It’s been a while since I’ve written because… 🤷. I have a few good ideas in the draft folder, but writing hasn’t felt super interesting for a while. However, that has recently changed because I wrote a tool!

If you want to read about the tool, jump to the tool description.

If you want to peruse the source (and mock my coding), head over to the GitHub repo.

And if you want to download the whole module and play, you can grab it from the PowerShell Gallery right now:

```powershell
Install-Module -Name Stepper -Scope CurrentUser -Force

# or

Install-PSResource -Name Stepper -Scope CurrentUser
```

Alright… on to the back story.

Long-Running PowerShell Scripts Suck

Imagine this: you’re a PowerShell-adept sysadmin tasked with collecting a ton of data from your workstation fleet and putting it into a report. You write a bunch of quick and dirty one-liners that collect everything you need and spit it into a pretty Excel workbook. Your manager thinks it’s great and asks you to start creating the report every month.

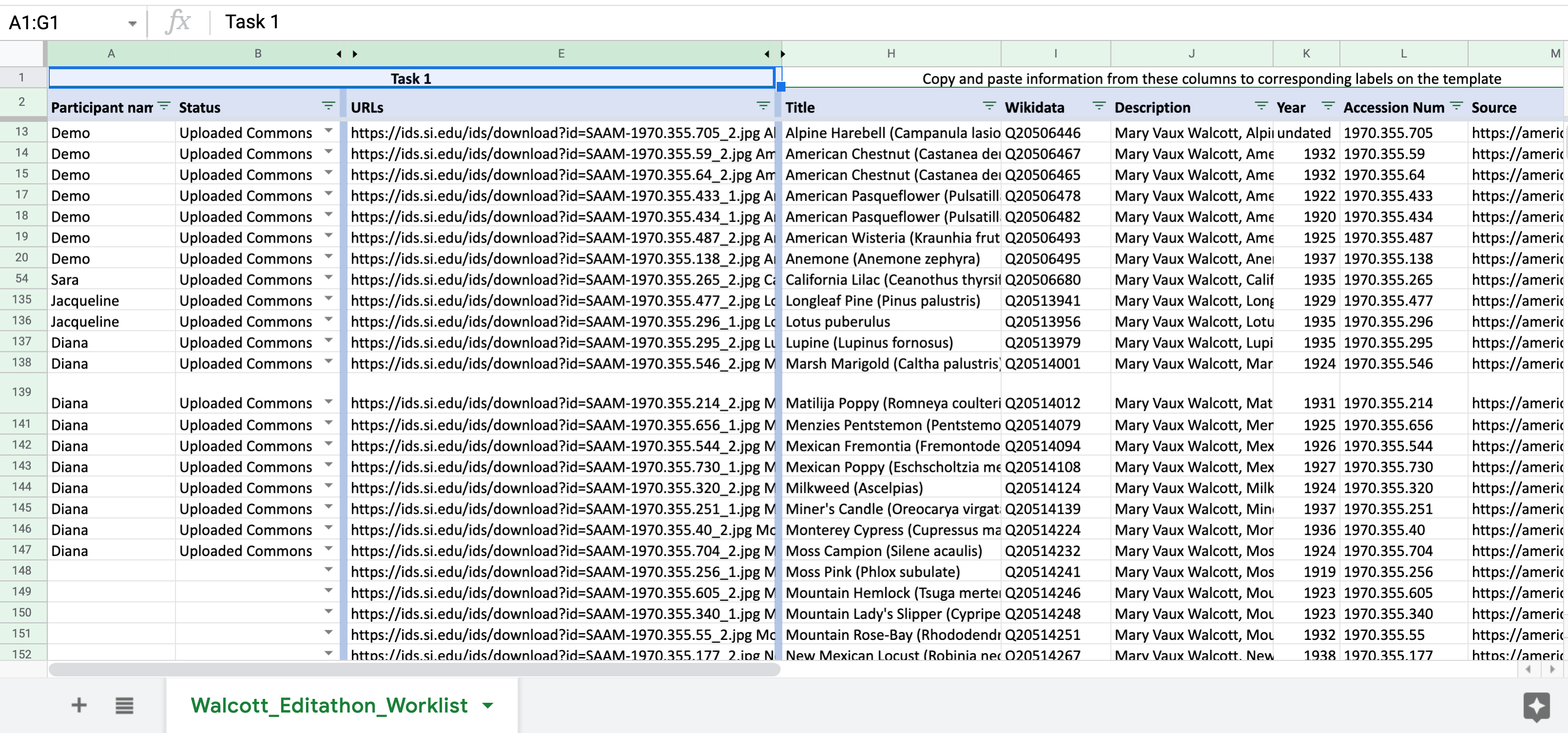

Source: https://commons.wikimedia.org/wiki/File:WikiProject_Smithsonian_AWHI_Mary_Vaux_Walcott_Illustrations_Edit-a-thon_-_spreadsheet_01.png


Being PowerShell-adept, you realized the beauty of automation many years ago. You build a script that runs perfectly on your local machine and in your test lab of five (5) virtual machines. The script has sensible defaults, good error handling, and completes in a reasonable time per machine.

Unfortunately, when you actually run the script against your production network, you realize you forgot to build error handling into ALL the spots where the script could fail. To add insult to injury, there are certain tools used in the script that you did not write and cannot update if/when you identify problems. Worst of all, running your script against 500 machines over a real network takes WAY longer per machine than your 5 lab machines took due to latency and connection quality. But your boss’ boss really likes the report and begins to expect it on a weekly basis.

Of course, they don’t give you the time you need to actually make your script fully resilient… <s>That would be a waste of time!</s>

So once a week, you’re stuck babysitting a script that theoretically should be able to run on its own. Eventually, you realize it’s probably easier to break the script into multiple smaller scripts that save their data to files. If one of the small scripts fails, you can re-run just that script without losing your previously completed work.
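For comparison, the hand-rolled version of this approach often ends up looking something like the sketch below. Each small script saves its output with Export-Clixml, and the next one reloads it with Import-Clixml. The two functions are hypothetical stand-ins for separate .ps1 files. They are written as functions only so the sketch runs as one piece, and the folder and file names are made up.

```powershell
# Hypothetical stand-ins for two separate small scripts (Part1.ps1, Part2.ps1).
# Each part persists its result to disk so a failed part can be re-run alone.
$DataDir = Join-Path ([System.IO.Path]::GetTempPath()) 'report-parts'
New-Item -Path $DataDir -ItemType Directory -Force | Out-Null

function Invoke-Part1 {
    # Collect data and save it; a real script would query the fleet here.
    $machines = 'PC01', 'PC02', 'PC03'
    $machines | Export-Clixml -Path (Join-Path $DataDir 'part1.xml')
}

function Invoke-Part2 {
    # Reload Part 1's output instead of recomputing it.
    $inputPath = Join-Path $DataDir 'part1.xml'
    if (-not (Test-Path -Path $inputPath)) {
        # Hand-written ordering check: Part 2 cannot run before Part 1.
        throw "Part 1 has not been run yet: $inputPath not found."
    }
    $machines = Import-Clixml -Path $inputPath
    $machines |
        ForEach-Object { [pscustomobject]@{ Computer = $_; Checked = $true } } |
        Export-Clixml -Path (Join-Path $DataDir 'part2.xml')
}
```

The explicit Test-Path check in Invoke-Part2 is the kind of ordering bookkeeping you end up writing by hand for every hand-off.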

But now you have a different problem set:

- How do you move data between these small scripts?

- What happens when the steps are run out of order?

- If the steps absolutely must run in order, how do you track that order?

I am currently living this life. (Except the “time to improve” bit… I got that!)

Complex Toolsets

At my current employer, we take an interesting approach to Active Directory Security Assessment tooling. In contrast with my previous employer, we do not build our toolkit 100% in-house. We use a healthy mix of in-house tools and common open-source security tools. When I joined, I was a bit taken aback by this approach, but I quickly saw the benefits. We can build detections for emerging threats fairly quickly, and when tools fail, there are communities to ask for support.

However, using such a mix of tools comes with some issues. Each tool has its own output format, so some of the processing effort is focused on manipulating data instead of working with it. Plus, the goals of open-source developers sometimes do not align with our goals, so issues we raise and features we request don’t get integrated into the mainline releases of the projects for various reasons.

Thankfully, my team is made up of an amazing group of consultants with a very wide range of development skills. They have built a toolkit that ties the various tools together to produce really high-quality data for consultants to work with. Unfortunately, this toolkit was developed organically over multiple years by multiple developers, which means processing data is not simple or consistent. There are many moving parts that could be run out of order and result in inconsistent data.

Most Tools Come From Solving Your Own Problems

Of course, as the new guy full of hubris, I was able to clearly see the solution to this problem very soon after joining. I would build a “controller” script that loads and runs the other tools in the correct order! Why didn’t the others see this solution already? THIS SHOULD BE A PIECE OF CAKE.

I built my controller script. I tested it. It worked well in my lab! But soon after, I got slapped with reality. One of the steps in the middle of the process requires customers to complete a questionnaire. In testing, I am the customer, and I can quickly answer the questionnaire with random BS.

But in the real world, getting this questionnaire back from the customer could take anywhere from 10 hours to 10 weeks. Did I want to leave a script sitting idle in my terminal for 10 weeks? What happens if Windows Update forces a reboot? What happens if I get overwhelmed with open tabs and close everything? How could I resume the script and not repeat work already completed?

The first way I tried to solve this issue was with -Skip<Section> switches. For example, if I’ve already set up a workspace, I could use -SkipWorkspaceSetup and go right past that part of the script! This works fine, but it requires me to remember:

- which sections I’ve completed.

- the switches required to skip those sections.

I’m terrible at remembering such things unless I take notes, but I’m also bad at taking notes. le sigh

Even worse, if any step in this process requires information from one or more previous steps, those previous steps need to be executed to ensure all the right data is in memory.

Do anything out of order, and you’re better off starting over fresh.
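Here is a stripped-down, hypothetical controller built that way. Only -SkipWorkspaceSetup comes from the text; Invoke-Assessment, -SkipCollection, and the paths are invented for illustration. The sketch shows the dependency problem. Skipping workspace setup also skips the assignment of $WorkspacePath, and the next section needs that variable even though the workspace already exists on disk.

```powershell
# Hypothetical controller using -Skip<Section> switches.
function Invoke-Assessment {
    [CmdletBinding()]
    param (
        [switch]$SkipWorkspaceSetup,
        [switch]$SkipCollection
    )

    if (-not $SkipWorkspaceSetup) {
        # Section 1: create the workspace and remember where it is (in memory only).
        $WorkspacePath = Join-Path ([System.IO.Path]::GetTempPath()) 'assessment'
        New-Item -Path $WorkspacePath -ItemType Directory -Force | Out-Null
    }

    if (-not $SkipCollection) {
        # Section 2 depends on a variable that only Section 1 assigns.
        if (-not $WorkspacePath) {
            throw 'WorkspacePath is not set; it is only assigned when workspace setup runs.'
        }
        # Return where collected data would be written.
        Join-Path $WorkspacePath 'collected.json'
    }
}
```

Running Invoke-Assessment -SkipWorkspaceSetup on a later day throws, even though the folder from the first run is still there. Skipping a section means losing its in-memory results.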

Stepper v0.0.0.0.0.0.01

Thankfully, computers ARE good at remembering things like state and can write important data to disk for later use. I envisioned a module that would allow me to split my giant script into smaller scripts, save each baby script’s data to disk between runs, and use a tiny orchestrator script to manage it all. And thus, the first version of Stepper was born in October.

The first version required multiple files like this:

```text
Script.ps1
Script.ps1.json
Script.ps1.stepper
Steps/
├── Step1.ps1
├── Step2.ps1
└── Step3.ps1
```

Here’s what each bit did:

- Script.ps1: the “orchestrator”, which loaded the Script.ps1.json file to get info about the steps and initialized the Script.ps1.stepper file if it didn’t exist.

- Script.ps1.json: defined each step with a name, a description, and the location of each of the smaller scripts.

- Script.ps1.stepper: held information about the last completed step, along with data to be passed between steps.

- Steps/Step#.ps1: the actual work to be performed.
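The v0 code isn’t shown here, so the following is only a rough, hypothetical reconstruction of the pattern described above. A JSON file lists the steps, and a state file records the last completed step plus the shared data. The orchestrator then runs whatever comes next. Several details are assumptions:

- the JSON shape (a Steps array with Name, Description, and Path)
- the -Data contract for step scripts
- the use of Export-Clixml for the state file

```powershell
# Hypothetical reconstruction of the v0 pattern, not the original code.
# Assumed config shape: { "Steps": [ { "Name": ..., "Description": ..., "Path": ... } ] }
# Assumed step contract: each step script has param($Data) and returns the updated $Data.
function Invoke-Orchestrator {
    [CmdletBinding()]
    param (
        [Parameter(Mandatory)][string]$ConfigPath,  # plays the role of Script.ps1.json
        [Parameter(Mandatory)][string]$StatePath    # plays the role of Script.ps1.stepper
    )

    $config = Get-Content -Path $ConfigPath -Raw | ConvertFrom-Json
    $steps = @($config.Steps)

    # Resume from saved state if a previous run left one behind.
    if (Test-Path -Path $StatePath) {
        $state = Import-Clixml -Path $StatePath
    }
    else {
        $state = @{ LastCompleted = -1; Data = @{} }
    }

    for ($i = $state.LastCompleted + 1; $i -lt $steps.Count; $i++) {
        $step = $steps[$i]
        Write-Verbose "Running $($step.Name): $($step.Description)"

        # A throwing step stops the loop before its progress is saved.
        $stepPath = $step.Path
        $state.Data = & $stepPath -Data $state.Data

        # Record progress and shared data after every successful step.
        $state.LastCompleted = $i
        $state | Export-Clixml -Path $StatePath
    }
}
```

Even this toy version shows the setup burden. Every step needs its own file, a param ($Data) block, and a return value.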

This worked well enough once set up, but actually setting everything up correctly absolutely sucked. If you already had a big script, you had to:

- break it into multiple individual script files

- sort out which variables were created in which step

- determine which data you wanted to persist between steps

- add parameter blocks and returns to each mini-script

Don’t forget: if you screwed up somewhere, it was impossible to quickly troubleshoot. Starting from a blank slate was a little easier, but it still required way too much thought.

You know what? I’m not even going to give any more details. This process sucked so hard I never want to think about it again. I knew there had to be an easier way.

Inspiration from Prior Research

Many years ago, when I first heard the word “DevOps”, I learned about something called PowerShell Workflows. It seemed useful, but I was very early in my PowerShell journey and didn’t fully grasp many of the concepts, let alone the execution. Then, when I realized Workflows were not supported in PowerShell Core, I forgot about them completely.

But as I began reading about resumable processes, Workflows came up again. And that’s when I noticed the syntax Workflows use:

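```powershell
# PowerShell Workflow syntax (Windows PowerShell 3.0-5.1 only).
# Parallel and Sequence are only valid inside a workflow block; <ActivityN> are placeholders.
Parallel {
    <Activity1>
    <Activity2>
    Sequence {
        <Activity3>
        <Activity4>
    }
}
<Activity5>
```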

Which looks shockingly similar to the syntax psake uses:

```powershell
# psakeFile.ps1
# Build must run before Test, because Test uses --no-build.
Task Default -Depends Build, Test

Task Build -Depends Clean {
    Write-Host "Building project..." -ForegroundColor Green
    dotnet build -c Release
}

Task Test {
    Write-Host "Running tests..." -ForegroundColor Cyan
    # Use the same configuration as Build so --no-build finds the Release output.
    dotnet test -c Release --no-build
}

Task Clean {
    Write-Host "Cleaning output..." -ForegroundColor Yellow
    Remove-Item ./bin -Recurse -Force -ErrorAction Ignore
}
```

I know it’s weird that syntax could inspire such a drastic change in approach for a module, but I knew if I designed Stepper to require as little thought and planning as possible, I’d be much more likely to use it! So I started again from scratch with the focus on simplicity of use. After a few weeks of work, I think I’ve landed on something neat, but I’ll let you be the judge.

Introducing Stepper

Stepper takes the PowerShell Workflow idea and REALLY dumbs it down.


It is not designed to orchestrate multi-system processes.

It does not support parallel activities.

It’s solely focused on creating resumable scripts that allow you to restart a failed or stopped script with a minimal amount of repeated work.

It does this by storing information about the state of the script in a .stepper file alongside the script. Each time you run a script that uses Stepper, it checks for a .stepper file in the same directory as the script. If such a file exists, all data from the previous run of the script is loaded into memory, and previously executed code is skipped. If the .stepper file does not exist, a new one is created.
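To make that mechanism concrete, here is a toy model of the idea. It is not Stepper’s implementation: it ignores script hashing, prompts, line tracking, and requirement checks. Start-ToyRun, New-ToyStep, Stop-ToyRun, and the Clixml state file are all hypothetical. Each step increments a counter, and steps at or below the saved counter are skipped. After every successful step, $Stepper is written to disk.

```powershell
# Toy model of a resumable step runner. NOT Stepper's code; all names are hypothetical.
$script:ToyStatePath = Join-Path ([System.IO.Path]::GetTempPath()) 'toy-demo.stepper.xml'

function Start-ToyRun {
    # Called at the top of a script: reset the step counter, then load saved state if any.
    $script:ToyStepIndex = 0
    if (Test-Path -Path $script:ToyStatePath) {
        $saved = Import-Clixml -Path $script:ToyStatePath
        $script:ToyLastCompleted = $saved.LastCompleted
        $script:Stepper = $saved.Data
    }
    else {
        $script:ToyLastCompleted = 0
        $script:Stepper = @{}
    }
}

function New-ToyStep {
    param ([Parameter(Mandatory)][scriptblock]$ScriptBlock)

    $script:ToyStepIndex++
    # A previous run already finished this step: skip it.
    if ($script:ToyStepIndex -le $script:ToyLastCompleted) { return }

    # Run the step. A terminating error leaves the function here, so progress is not recorded.
    & $ScriptBlock

    # Persist progress and the shared $Stepper hashtable after each successful step.
    $script:ToyLastCompleted = $script:ToyStepIndex
    @{ LastCompleted = $script:ToyLastCompleted; Data = $script:Stepper } |
        Export-Clixml -Path $script:ToyStatePath
}

function Stop-ToyRun {
    # The whole script succeeded, so the state file is no longer needed.
    Remove-Item -Path $script:ToyStatePath -ErrorAction SilentlyContinue
}
```

A script using this sketch calls Start-ToyRun at the top, wraps each logical step in New-ToyStep { ... }, and calls Stop-ToyRun at the end. Counting steps in call order is the simplest possible design. It also means that editing or reordering steps between runs can misalign the saved state.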

To utilize Stepper in your scripts, you only have to do one thing:

- Wrap a single block of code in New-Step {}.

Once wrapped, this block of code is now a “Step”. A Step may be a single line of PowerShell, a thousand lines of PowerShell, an external script, etc. If the logic has a distinct beginning and end, it’s a Step.

More importantly, you only have to think about two things:

- What are the logical “Steps” in the script?

- Is data from one Step needed in other Steps in the script?

If you can answer these questions about your script, you can use Stepper. Let’s see how!

An Example Script

I find it’s easiest to explain Stepper via real-world(-ish) examples. The completely fictitious script below gets a list of all new users on a system, creates personal storage for them, and then creates a usage report used for billing purposes. It’s scheduled to run once weekly:

```powershell
# Get all users of a system that don't have personal storage.
# Typical runtime: 10 seconds
$NewUsers = Get-SystemUser -All | Where-Object HasPersonalStorage -eq $false

# Create personal storage for all new users on the big server.
# Typical runtime: 1 hour
$NewUsers | New-PersonalStorage -Computer BigServer

# Get data about personal storage usage.
# Typical runtime: 6 hours
$PersonalStorageUsage = Get-PersonalStorage -Computer BigServer | Select-Object User, Path, LastBackup, PercentUsed

# Export report about personal storage usage:
# Typical runtime: 10 minutes
$PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
```

In this obviously contrived example, any of the lines could fail. For example, what happens if the big server goes offline during this step and the script errors out?

```powershell
$NewUsers | New-PersonalStorage -Computer BigServer
```

Run the script from the beginning, right? But how do you know if HasPersonalStorage is accurate? What if the New-PersonalStorage cmdlet writes that property to the user object before the personal storage is fully created?

Yes, that’s a logic error, but it’s not uncommon to encounter such an error in real life. If this script crashed while running New-PersonalStorage, you could end up with users that appear to have personal storage but actually don’t!

What if Windows Terminal crashes during this line?

```powershell
$PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
```

Since $PersonalStorageUsage was only kept in memory, you’d have to re-run the previous step… the one that says Typical runtime: 6 hours. Yuck. Also, if this is for billing purposes, re-running the whole process may result in over- or under-billing.
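If you want to try these failure modes without a real BigServer, you can stub out the fictional cmdlets. The functions below are hypothetical, in-memory stand-ins for Get-SystemUser, New-PersonalStorage, and Get-PersonalStorage. The sample users and the $script:SimulateOutage flag are invented for illustration.

```powershell
# Hypothetical, in-memory stand-ins for the fictional cmdlets in the example.
# Set $script:SimulateOutage = $true to make New-PersonalStorage fail like an offline server.
$script:SimulateOutage = $false
$script:FakeUsers = @(
    [pscustomobject]@{ Name = 'alice'; HasPersonalStorage = $true }
    [pscustomobject]@{ Name = 'bob'; HasPersonalStorage = $false }
    [pscustomobject]@{ Name = 'carol'; HasPersonalStorage = $false }
)
$script:FakeStorage = [System.Collections.Generic.List[object]]::new()

function Get-SystemUser {
    param ([switch]$All)  # accepted to match the example; the stub always returns everyone
    $script:FakeUsers
}

function New-PersonalStorage {
    param (
        [Parameter(Mandatory, ValueFromPipeline)][psobject]$User,
        [Parameter(Mandatory)][string]$Computer
    )
    process {
        if ($script:SimulateOutage) { throw "$Computer is offline." }
        $script:FakeStorage.Add([pscustomobject]@{
            User        = $User.Name
            Path        = "\\$Computer\home\$($User.Name)"
            LastBackup  = $null
            PercentUsed = 0
        })
        # Mark the user only after the storage entry exists.
        $User.HasPersonalStorage = $true
    }
}

function Get-PersonalStorage {
    param ([Parameter(Mandatory)][string]$Computer)
    $script:FakeStorage
}
```

Load the stubs before running the example. Export-Excel comes from the community ImportExcel module. If you don’t have it installed, Export-Csv works as a stand-in for experimenting.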

Let’s Get Steppin’!

This is what the script looks like after making it resumable:

```powershell
[CmdletBinding()]
param ()
#Requires -Modules Stepper

# Get all users of a system that don't have personal storage.
# Typical runtime: 10 seconds
New-Step {
    $Stepper.NewUsers = Get-SystemUser -All | Where-Object HasPersonalStorage -eq $false
}

# Create personal storage for all new users on the big server.
# Typical runtime: 1 hour
New-Step {
    $Stepper.NewUsers | New-PersonalStorage -Computer BigServer
}

# Get data about personal storage usage.
# Typical runtime: 6 hours
New-Step {
    $Stepper.PersonalStorageUsage = Get-PersonalStorage -Computer BigServer | Select-Object User, Path, LastBackup, PercentUsed
}

# Export report about personal storage usage:
# Typical runtime: 10 minutes
New-Step {
    $Stepper.PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
}

Stop-Stepper
```

Now let’s break down the changes:

- New-Step {}: any code wrapped in this block is resumable. If the script dies, errors out, crashes, or is unexpectedly stopped during a resumable code block, the script will restart at this block on the next run.

- Stop-Stepper: cleans up the .stepper file after the script completes successfully.

- $Stepper.<Variable>: represents data that will be shared between steps. Additionally, when a Step finishes successfully, all data in $Stepper. variables is written to disk for use in subsequent resumed runs of the script.

- [CmdletBinding()], param (), and #Requires -Modules Stepper: recommended but not required additions to a Stepper script. [CmdletBinding()] and param () make the script act more like a cmdlet and allow the use of Common Parameters such as -Verbose. #Requires -Modules Stepper makes PowerShell load Stepper before the script runs, and refuse to run the script if the module isn’t available.

Let’s revisit our previous examples to see how things change, hopefully for the better?

What happens if the big server goes offline during this step and the script errors out?

```powershell
New-Step {
    $Stepper.NewUsers | New-PersonalStorage -Computer BigServer
}
```

When you rerun, you will be presented with the following:

```text
~/iCloud Drive/Downloads
PS7> ./Example.ps1
[!] Incomplete script run detected!
Total Steps: 4
Steps Completed: 1
Variables: NewUsers
Last Activity: 2025-12-29 13:55:37
How would you like to proceed?
[R] Resume Example.ps1 from Line 13 (Default)
[S] Start over
[M] More details
[Q] Quit
Choice? [R/s/m/q]:
```

Press Enter or R, and you’ll resume at Step 2!

```text
Choice? [R/s/m/q]: r
Resuming from Step 2...
```

And what if Windows Terminal crashes during this line:

```powershell
New-Step {
    $Stepper.PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
}
```

You get this:

```text
~/iCloud Drive/Downloads
PS7> ./Example.ps1
[!] Incomplete script run detected!
Total Steps: 4
Steps Completed: 3
Variables: NewUsers, PersonalStorageUsage
Last Activity: 2025-12-29 13:56:37
How would you like to proceed?
[R] Resume Example.ps1 from Line 25 (Default)
[S] Start over
[M] More details
[Q] Quit
Choice? [R/s/m/q]:
```

Press Enter or R, and you’re right back to creating the final report. No six-hour wait!

But what if you don’t remember what these steps are, or whether you should resume them? Let’s check more details with M:

```text
Choice? [R/s/m/q]: m
More details:
Current script hash: F06698A9429AC0C27857CC76AF75E6AD268CCDD6106A41B3A0AFA1485938B883
Stepper variables:
NewUsers: null
PersonalStorageUsage: null
Last completed step:
New-Step {
$Stepper.PersonalStorageUsage = Get-PersonalStorage -Computer BigServer | Select-Object User, Path, LastBackup, PercentUsed
}
Context around next restart line:
23: # Export report about personal storage usage:
24: # Typical runtime: 10 minutes
25: New-Step {
26: $Stepper.PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
27: }
28:
How would you like to proceed?
[R] Resume Example.ps1 from Line 25 (Default)
[S] Start over
[M] More details
[Q] Quit
Choice? [R/s/m/q]:
```

In this pane, you can see the last completed step and the next step to be performed!

Using Stepper, you no longer need to fear long-running scripts!

Some Other Cool Features

When you first run a Stepper script, it will check to make sure the script contains everything you need to succeed.

```text
~/iCloud Drive/Downloads
PS7> ./Example2.ps1
[!] Script requirements check for Example2.ps1:
Missing [CmdletBinding()] declaration
Missing #requires -Modules Stepper statement
How would you like to handle this?
[A] Add missing declarations (Default)
[S] Skip
[Q] Quit
Choice? [A/s/q]:
```

Choose A and see:

```text
Choice? [A/s/q]: A
Declarations added. Please re-run Example2.ps1.
```

We need to re-run the script because PowerShell isn’t great about changing the running script in memory. ;)
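A check like this can be done with PowerShell’s own parser rather than text matching. The sketch below is a hypothetical illustration, not Stepper’s source, and Get-MissingStepperDeclaration is an invented name. [System.Management.Automation.Language.Parser]::ParseInput is a real PowerShell API, as are the ParamBlock and ScriptRequirements properties of the AST it returns.

```powershell
# Hypothetical sketch of a requirements check; not Stepper's implementation.
function Get-MissingStepperDeclaration {
    param ([Parameter(Mandatory)][string]$ScriptText)

    $tokens = $null
    $errors = $null
    $ast = [System.Management.Automation.Language.Parser]::ParseInput(
        $ScriptText, [ref]$tokens, [ref]$errors)

    # [CmdletBinding()] is attached to the script's param block, if there is one.
    $hasCmdletBinding = $ast.ParamBlock -and
        ($ast.ParamBlock.Attributes | Where-Object { $_.TypeName.Name -eq 'CmdletBinding' })

    # #Requires statements are collected in ScriptRequirements (null when there are none).
    $hasRequires = $ast.ScriptRequirements -and
        ($ast.ScriptRequirements.RequiredModules | Where-Object Name -eq 'Stepper')

    # Emit one message per missing declaration.
    if (-not $hasCmdletBinding) { 'Missing [CmdletBinding()] declaration' }
    if (-not $hasRequires) { 'Missing #requires -Modules Stepper statement' }
}
```

Parsing only records the #Requires statement. Whether the module is actually installed is checked when the script runs, not here.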

Stepper also checks other things. Suppose you forgot to wrap one of your steps in New-Step {}:

```text
~/iCloud Drive/Downloads
PS7> ./Example2.ps1
[!] Non-resumable code detected in Example2.ps1.
This code will execute on every run of this script,
including resumed runs:
25: $Stepper.PersonalStorageUsage | Export-Excel .\PersonalStorageReport.xlsx
How would you like to handle this?
[W] Wrap in New-Step block (Default)
[M] Mark as expected to ignore this code on future script runs
[D] Delete this code
WARNING: Because this code references $Stepper variables,
deleting it may impact functionality.
[I] Ignore and continue
[Q] Quit
Choice? [W/m/d/i/q]:
```

Notice it tells you when $Stepper. variables are in use! Deleting or changing that code may break later Steps that rely on those variables.

We press Enter or W to wrap the code and run again. Oh! Stepper notices that we didn’t include Stop-Stepper:

```text
Choice? [W/m/d/i/q]: W
Changes applied to non-resumable code. Please re-run Example2.ps1.

~/iCloud Drive/Downloads
PS7> ./Example2.ps1
[!] Script 'Example2.ps1' does not call Stop-Stepper.
Stop-Stepper ensures the state file is removed when the script completes successfully.
How would you like to proceed?
[A] Add 'Stop-Stepper' to the end of the script (Default)
[C] Continue without Stop-Stepper
[Q] Quit
Choice? [A/c/q]: A
Stop-Stepper added. Please re-run Example2.ps1.
```

Finally, we have a steppable script we can run and resume at will.
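The non-resumable-code and Stop-Stepper checks can be sketched the same way. The idea is to walk the script’s top-level statements and flag anything that isn’t a New-Step call. Find-NonResumableCode is hypothetical and much simpler than what the transcripts show: it reports each unwrapped statement individually and doesn’t offer fixes. It does, however, mirror the $Stepper. warning.

```powershell
# Hypothetical sketch of non-resumable code detection; not Stepper's implementation.
function Find-NonResumableCode {
    param ([Parameter(Mandatory)][string]$ScriptText)

    $tokens = $null
    $errors = $null
    $ast = [System.Management.Automation.Language.Parser]::ParseInput(
        $ScriptText, [ref]$tokens, [ref]$errors)

    $hasStopStepper = $false
    $findings = @(foreach ($statement in $ast.EndBlock.Statements) {
        # A bare command such as New-Step { ... } parses as a pipeline
        # whose first element is a CommandAst.
        $commandName = $null
        if ($statement -is [System.Management.Automation.Language.PipelineAst] -and
            $statement.PipelineElements[0] -is [System.Management.Automation.Language.CommandAst]) {
            $commandName = $statement.PipelineElements[0].GetCommandName()
        }

        if ($commandName -eq 'Stop-Stepper') { $hasStopStepper = $true; continue }
        if ($commandName -eq 'New-Step') { continue }

        # Anything else runs on every execution, including resumed runs.
        [pscustomobject]@{
            Line        = $statement.Extent.StartLineNumber
            Text        = $statement.Extent.Text
            UsesStepper = $statement.Extent.Text -match '\$Stepper\.'
        }
    })

    [pscustomobject]@{
        NonResumable   = $findings
        HasStopStepper = $hasStopStepper
    }
}
```

The param block and #Requires line don’t show up as statements, so they are never flagged.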

But what if you realize your script has a mistake in it and you need to change it? For example, what if this line:

```powershell
$Stepper.PersonalStorageUsage = Get-PersonalStorage -Computer BigServer | Select-Object User, Path, LastBackup, PercentUsed
```

should actually be:

```powershell
$Stepper.PersonalStorageUsage = Get-PersonalStorage -Computer BigServer | Select-Object User, Path, LastBackupTime, PercentUsed
```

When you re-run the script, Stepper will inform you the script has changed and the results may be inconsistent!

```text
~/iCloud Drive/Downloads
PS7> ./Example2.ps1
[!] Incomplete script run detected, but Example2.ps1 has been modified.
Total Steps: 4
Steps Completed: 3
Variables: NewUsers, PersonalStorageUsage
Last Activity: 2025-12-29 14:06:07
How would you like to proceed?
[R] Resume Example2.ps1 from Line 25 (May produce inconsistent results)
[S] Start over (Default)
[M] More details
[Q] Quit
Choice? [r/S/m/q]:
```

Now the default option is to Start Over!
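The “More details” output shows a 64-character hexadecimal script hash. That length and format are consistent with a SHA-256 hash from Get-FileHash, whose default algorithm is SHA256. The sketch below shows change detection along those lines. It assumes the previous run saved that hash in its state file, and both function names are hypothetical.

```powershell
# Hypothetical sketch of script-change detection; not Stepper's implementation.
function Test-ScriptModified {
    param (
        [Parameter(Mandatory)][string]$ScriptPath,
        [Parameter(Mandatory)][string]$SavedHash  # hash recorded by the previous run
    )
    # Get-FileHash returns uppercase hex; -ne on strings is case-insensitive anyway.
    $currentHash = (Get-FileHash -Path $ScriptPath -Algorithm SHA256).Hash
    $currentHash -ne $SavedHash
}

function Get-DefaultResumeChoice {
    param (
        [Parameter(Mandatory)][string]$ScriptPath,
        [Parameter(Mandatory)][string]$SavedHash
    )
    # Mirror the behavior shown above: a modified script defaults to [S]tart over.
    if (Test-ScriptModified -ScriptPath $ScriptPath -SavedHash $SavedHash) { 'S' } else { 'R' }
}
```

Hashing the whole file means any edit, even to a comment, counts as a change. That is one reason showing the actual diff (the first planned feature below) would be useful.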

Planned Features and Open Questions

Stepper isn’t done yet, for sure. It’s very usable as is, but it can always be improved. Here are some things I’ve been considering:

- If a script changes between runs, Stepper does not currently tell you what changed. It would be nice to include the actual changes when viewing More Details.

- Currently, all code between Steps is treated as a single code block. A more intelligent way to identify code blocks may be nice.

- I’d like to add a “watchdog” process that auto-resumes failed steps if they are marked for auto-resume.

- It would be nice to fully support the “Saga” design pattern to undo any changes made by failed steps, but that also seems like way bigger-brained stuff than what I currently do.

- Logging would be cool. Especially logging when things fail and why.

- Related to logging: my friend Mark Go suggested that Stepper could be used by folks new to PowerShell to help isolate where their scripts are failing. Maybe like a fancy try/catch!

- I have not tried nesting Steps to see what happens. Wrapping every single line in New-Step could be a fun way to track the progress of a script.

Conclusion

If you write scripts that have lots of steps, run for a long time, rely on other systems, rely on humans, or use tools that you don’t control, consider Stepper. I’m excited to have people other than me actually use it and tell me how it sucks!

As always, email me @ jake@dotdot.horse if you have anything to say, nice or otherwise!